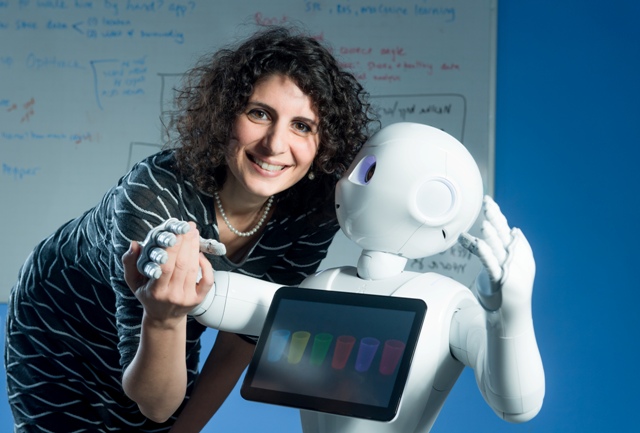

BGU researchers have begun to identify what people prefer in human-robot interactions, and have found a need to personalize those encounters to fit both the person’s preferences and the designated task.

According to a new study published in Restorative Neurology and Neuroscience, the researchers tested user preferences when interacting with a robot on a joint movement task, as a first step towards developing an interactive movement protocol to be used as part of a rehabilitation session.

“In the future, human beings may increasingly rely on robotic assistance for daily tasks and our research shows that the type of motions that the robot makes when interacting with the humans makes a difference in how satisfied the person is with the interaction,” according to Dr. Levy-Tzedek. “People feel that if robots don't move like they do, it is unsettling and they will utilize them less frequently.”

In the study, 22 college-age participants played a leader-follower mirror game with a robotic arm, in which the person and the robot took turns following each other's joint movement patterns. When the robotic arm was leading, it performed movements that were either sharp, like dribbling a ball, or smooth, like tracing a circle.

The study yielded three main conclusions. First, robotic movement primes the human movement. That is, the person tends to imitate the movements of the robot. “This is a very important aspect to consider, for example, when designing a robotic nurse who assists a surgeon in the operating room,” Dr. Levy-Tzedek says. “You wouldn’t want a robotic nurse to make sharp ‘robotic’ movements that will affect the way the surgeon moves her hands during an operation.”

Second, there was no clear-cut preference for leading or following the robot. Half the group preferred to lead the human-robot joint movement, while the other half preferred to follow.

According to Dr. Levy-Tzedek, “This finding highlights the importance of developing personalized human-robot interactions. Just as the field of medicine is moving towards customized medicine for each patient, the field of robotics needs to customize the pattern of interaction differently for each user.”

Lastly, the study participants preferred smooth, familiar movements, which resemble human movements, over sharp (“robotic”) or unfamiliar movements when the robot was leading the interaction.

Thus, “determining the elements in the interaction that make users more motivated to continue it is important in designing future robots that will interact with humans on a daily basis,” Dr. Levy-Tzedek says.

The initial research, by Shir Kashi, won a “best poster award” at the Human-Robot Interaction (HRI) Conference in Vienna in March. This study was a continuation of that research. Kashi completed her undergraduate degree in Cognitive Sciences at BGU and is now embarking on her graduate studies in the lab.

The study was funded by the Leir Foundation, the Bronfman Foundation, the Promobilia Foundation, and the Israeli Science Foundation (grants #535/16 and #2166/16). Additional funding was provided by the Helmsley Charitable Trust through the Agricultural, Biological and Cognitive Robotics Initiative and by the BGU Marcus Endowment Fund.