An international group of researchers has developed a 3-D scanning technique that reconstructs complex objects more accurately than existing methods. The method combines robotics and water.

"We use a robotic arm to immerse an object along an axis at various angles and measure the volume displaced by each dip. By combining these measurement sequences, we create a volumetric shape representation of the object," says Prof. Andrei Scharf of the Department of Computer Science at Ben-Gurion University of the Negev (BGU).
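To make the idea of a single "dip" concrete, here is a minimal sketch of what one measurement sequence records for an idealized, voxelized object. It assumes the object is lowered along one fixed direction, that each voxel counts as submerged once its centre passes below the water plane, and that the rise of the water level is ignored. The helpers `make_voxel_sphere` and `dip_curve` are hypothetical names for illustration, not the researchers' code.

```python
import math


def make_voxel_sphere(n, radius):
    """Hypothetical test object: voxel indices (i, j, k) inside a sphere centred in an n*n*n grid."""
    c = (n - 1) / 2
    return {
        (i, j, k)
        for i in range(n)
        for j in range(n)
        for k in range(n)
        if (i - c) ** 2 + (j - c) ** 2 + (k - c) ** 2 <= radius ** 2
    }


def dip_curve(voxels, direction, depths, voxel_size=1.0):
    """Idealized dip: displaced volume at each immersion depth along `direction`.

    A voxel is treated as fully submerged once its centre's height along the
    (normalized) dipping direction, measured from the object's lowest voxel
    centre, is below the immersion depth. Water-level rise is ignored.
    """
    norm = math.sqrt(sum(d * d for d in direction))
    u = [d / norm for d in direction]
    heights = [voxel_size * sum(p * q for p, q in zip(v, u)) for v in voxels]
    lowest = min(heights)
    cell = voxel_size ** 3
    return [cell * sum(1 for h in heights if h - lowest < depth) for depth in depths]


# One dip along the z-axis and one along a tilted axis; depths are in voxel units.
sphere = make_voxel_sphere(9, 4)
depths = [0.5 * s for s in range(20)]
curve_z = dip_curve(sphere, (0, 0, 1), depths)
curve_tilted = dip_curve(sphere, (1, 1, 0), depths)
print(curve_z[-1] == len(sphere), curve_tilted[-1] == len(sphere))  # True True
```

Each orientation yields one non-decreasing curve that ends at the object's total volume; dipping at several angles produces the set of curves that the reconstruction combines.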

"The key feature of our method is that it employs fluid displacements as the shape sensor," Prof. Scharf explains. "Unlike optical sensors, the liquid has no line-of-sight requirements. It penetrates cavities and hidden parts of the object and is unaffected by transparent or glossy materials, thus bypassing all visibility and optical limitations of conventional scanning devices."

The researchers used Archimedes' principle of fluid displacement (the volume of displaced fluid equals the volume of the submerged object) to recast surface reconstruction as a volume-measurement problem. This principle is the foundation of the team's approach to the limitations of current 3-D shape reconstruction methods.
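Archimedes' principle reduces each measurement to simple arithmetic. The sketch below assumes a bath with vertical walls, so the submerged volume is the bath's cross-sectional area times the rise in water level; differencing successive readings then gives the volume of the object lying between consecutive immersion depths. The function names and the readings are hypothetical illustration values, not data from the paper. In a real bath, mapping each reading to an immersion depth would also have to account for the water-level rise itself.

```python
def displaced_volumes(levels, rest_level, bath_area):
    """Archimedes: submerged volume = bath cross-section * rise in water level.

    Assumes a bath with vertical walls, so its cross-sectional area is constant.
    Units must be consistent (e.g. mm and mm^2 give mm^3).
    """
    return [bath_area * (level - rest_level) for level in levels]


def slab_volumes(volumes):
    """Volume of the object lying between consecutive immersion depths."""
    return [later - earlier for earlier, later in zip(volumes, volumes[1:])]


# Hypothetical readings: a 200 mm x 300 mm bath, water level logged at four immersion depths.
bath_area = 200.0 * 300.0                # mm^2
levels = [100.0, 100.5, 101.5, 102.0]    # mm
volumes = displaced_volumes(levels, 100.0, bath_area)
print(volumes)                # [0.0, 30000.0, 90000.0, 120000.0]  (mm^3)
print(slab_volumes(volumes))  # [30000.0, 60000.0, 30000.0]
```

Since 1 mL is 1000 mm³, the final reading corresponds to 120 mL of the object submerged.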

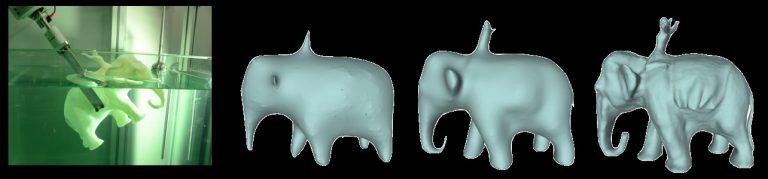

The group demonstrated the new technique on 3-D shapes with a range of complexity, including an elephant sculpture, a mother and child hugging and a DNA double helix. The results show that the dip reconstructions are nearly as accurate as the original 3-D model.

Above: 3-D dip scanner: The object is dipped in a bath of water by a robotic arm. The quality of the reconstruction improves as the number of dipping orientations is increased. Photo: ACM
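As the figure caption notes, reconstruction quality improves as more dipping orientations are used. A toy 2-D example shows one reason why: dips along only a few directions can leave different shapes indistinguishable. The sketch assumes pixel shapes and integer dipping directions, and counts the pixels at each slab index `x*dx + y*dy`, which are the successive differences of an idealized dip curve. It is a standard tomography-style illustration, not the paper's reconstruction algorithm; `slab_counts` is a hypothetical helper.

```python
def slab_counts(pixels, direction):
    """Pixel count at each slab index x*dx + y*dy for an integer direction (dx, dy).

    Indices are offset so the lowest occupied slab is 0; these counts are the
    successive differences of an idealized dip curve along that direction.
    """
    dx, dy = direction
    heights = [x * dx + y * dy for x, y in pixels]
    lowest = min(heights)
    counts = [0] * (max(heights) - lowest + 1)
    for h in heights:
        counts[h - lowest] += 1
    return tuple(counts)


# Two different shapes, given as sets of (x, y) pixels on a 2x2 grid.
shape_a = {(0, 0), (1, 1)}  # main diagonal
shape_b = {(0, 1), (1, 0)}  # anti-diagonal

for d in [(1, 0), (0, 1), (1, 1)]:
    a, b = slab_counts(shape_a, d), slab_counts(shape_b, d)
    print(d, a, b, "same" if a == b else "different")
# (1, 0) (1, 1) (1, 1) same
# (0, 1) (1, 1) (1, 1) same
# (1, 1) (1, 0, 1) (2,) different
```

Dips along the two axes cannot tell the shapes apart, but a diagonal dip separates them; adding orientations removes such ambiguities.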

The new technique is related to computed tomography (CT), an imaging method that reconstructs an object's interior from X-ray projections taken at many angles. However, CT scanners are bulky and expensive and can only be operated in a controlled, radiation-safe environment.
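The link to tomography can be written down under idealized assumptions: a rigid object with indicator function $\chi$ (1 inside the object, 0 outside), exact volume readings, and the water plane $\{\mathbf{x} : \mathbf{x}\cdot\theta = t\}$ at height $t$ along the object-frame unit direction $\theta$, so the submerged part is $\{\mathbf{x}\cdot\theta < t\}$. With $H$ the Heaviside step and $\delta$ the Dirac delta, the dip curve and its derivative are:

```latex
V_\theta(t) = \int_{\mathbb{R}^3} \chi(\mathbf{x})\, H\!\left(t - \mathbf{x}\cdot\theta\right) d\mathbf{x},
\qquad
\frac{dV_\theta}{dt} = \int_{\mathbb{R}^3} \chi(\mathbf{x})\, \delta\!\left(t - \mathbf{x}\cdot\theta\right) d\mathbf{x}
= (\mathcal{R}\chi)(\theta, t)
```

The derivative is the cross-sectional area of the object in the plane at height $t$, which is the 3-D Radon transform $\mathcal{R}$ of $\chi$. This standard identity is offered as an illustration rather than as the paper's exact formulation; note also that 3-D X-ray CT measures line integrals rather than plane integrals, so the analogy is with the Radon transform more than with CT data directly.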

Prof. Scharf says, "Our approach is both safe and inexpensive, and a much more appealing alternative for generating a complete shape at low computational cost, using an innovative data collection method."

The researchers will present their paper, "Dip Transform for 3D Shape Reconstruction," during SIGGRAPH 2017 in Los Angeles, July 30 to August 3. It is also published in the July issue of ACM Transactions on Graphics. SIGGRAPH spotlights the most innovative computer graphics research and interactive techniques worldwide.

In addition to Prof. Scharf, who is also affiliated with the Advanced Innovation Center for Future Visual Entertainment (AICFVE) in Beijing, China, the researchers include Kfir Aberman, Oren Katzir and Daniel Cohen-Or of Tel Aviv University and AICFVE; Baoquan Chen, Qiang Zhou and Zegang Luo of Shandong University; and Chen Greif of The University of British Columbia.

The research project was supported in part by the Joint NSFC-ISF Research Program (No. 61561146397), jointly funded by the National Natural Science Foundation of China and the Israel Science Foundation; the National Basic Research (973) grant (No. 2015CB352501); and NSERC of Canada grant 261539.